What Does a Data Engineer Do All Day?

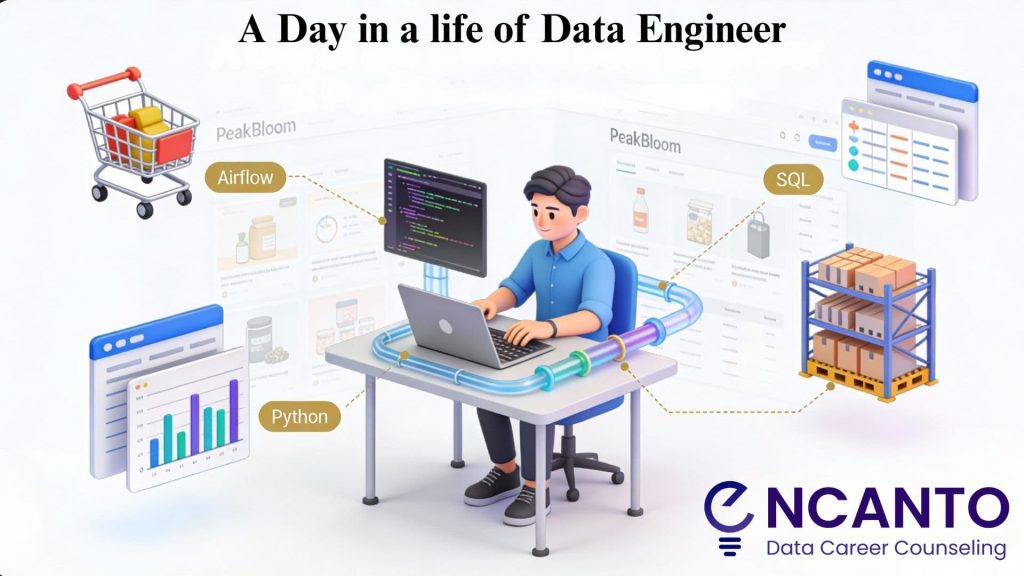

December 29, 2025

Data engineers work across many industries, including e-commerce, finance, healthcare, and streaming platforms. They are the invisible builders who make sure data flows reliably so that analysts and data scientists can do their jobs. Whether it is tracking online orders for an e-commerce site or processing patient records in healthcare, data engineers build the foundation.

In this blog post, I will walk you through an average day for a data engineer at PeakBloom, an e-commerce company. You will see how their work directly affects sales, inventory, and customer experience.

8:30 AM – Checking Alerts and Overnight Pipelines

The day starts with coffee and a quick look at alerts.

At PeakBloom, thousands of orders are placed every night. Data pipelines collect:

- Website clickstream data

- Orders and payments

- Inventory updates from warehouses

- Marketing data from Google Ads and Meta Ads

The data engineer, Ryan, opens the monitoring dashboard to check whether all jobs ran successfully.

Today’s issue:

The pipeline that loads last night’s orders into the data warehouse failed. The business team needs this data for the daily revenue report, so this becomes the first priority.

9:00 AM – Debugging a Broken Orders Pipeline

Ryan checks the job logs in Airflow, the team's orchestration tool. The error message says:

“Column discount_code not found.”

What happened:

The product team released a new discount feature at midnight. The orders API now includes a discount_code field whenever a customer applies a coupon. The pipeline's SQL script was not updated to account for the new field, so the job failed.

Steps Ryan takes:

- Pulls a sample of raw order data from the source API.

- Confirms that some orders include discount_code and some do not.

- Updates the transformation logic so that orders without a discount_code load with an empty (null) value instead of breaking the job.
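The "handle the new field safely" step can be sketched in a few lines of Python. This is a minimal illustration, not Ryan's actual code: the column list `ORDER_COLUMNS` and the helper `normalize_order` are hypothetical names, and the sample records are made up. The idea is that an optional field is read with a default instead of being assumed present.

```python
from typing import Any

# Hypothetical column list for the orders table after the discount launch.
ORDER_COLUMNS = ["order_id", "customer_id", "amount_cents", "discount_code"]


def normalize_order(raw: dict[str, Any]) -> dict[str, Any]:
    """Return a record that has every expected column.

    Orders placed without a coupon simply lack the discount_code key,
    so .get() fills it with None (typically loaded as NULL) instead of
    raising a KeyError. Keys not in ORDER_COLUMNS are dropped.
    """
    return {col: raw.get(col) for col in ORDER_COLUMNS}


sample = [
    {"order_id": 1, "customer_id": 7, "amount_cents": 5990, "discount_code": "WINTER10"},
    {"order_id": 2, "customer_id": 9, "amount_cents": 2450},  # no coupon applied
]
rows = [normalize_order(r) for r in sample]
print(rows[1]["discount_code"])  # None
```

One design trade-off: dropping unknown keys keeps a future field from breaking the load, but that field is also silently ignored until someone adds it, so it is worth pairing this with an alert on unexpected keys.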

After testing the change on a small batch, Ryan reruns the job and backfills the missing data.
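Rerunning and backfilling is only safe if loading the same day twice does not double-count orders. A common pattern is delete-then-insert for one date inside a single transaction. The sketch below uses Python's built-in `sqlite3` as a stand-in for the real warehouse; the `orders` table layout and the `backfill_day` helper are assumptions made for illustration.

```python
import sqlite3


def backfill_day(conn: sqlite3.Connection, day: str, rows: list[tuple]) -> int:
    """Reload one day's orders so that rerunning the job never duplicates rows.

    Deleting the day's rows and inserting them again in one transaction
    makes the load idempotent: running it twice gives the same result.
    """
    with conn:  # commits on success, rolls back if anything raises
        conn.execute("DELETE FROM orders WHERE order_date = ?", (day,))
        conn.executemany(
            "INSERT INTO orders (order_id, order_date, amount_cents, discount_code) "
            "VALUES (?, ?, ?, ?)",
            rows,
        )
    return conn.execute(
        "SELECT COUNT(*) FROM orders WHERE order_date = ?", (day,)
    ).fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER, order_date TEXT, "
    "amount_cents INTEGER, discount_code TEXT)"
)
day_rows = [(1, "2025-12-28", 5990, "WINTER10"), (2, "2025-12-28", 2450, None)]
backfill_day(conn, "2025-12-28", day_rows)
print(backfill_day(conn, "2025-12-28", day_rows))  # 2: the rerun does not duplicate
```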

The finance dashboard now shows complete revenue and discount usage for yesterday. The business team can trust the numbers again.

10:30 AM – Daily Standup with Data and Product Teams

Next comes a short daily standup with data engineers, data analysts, and the product manager.

Topics today:

- Orders pipeline incident and fix

- New request: “Can we track payment page drop-offs?”

- Planned work: building a better inventory data pipeline

The drop-off tracking becomes a mini project for later. Today, Ryan focuses on inventory.

11:00 AM – Designing an Inventory Pipeline

PeakBloom sells thousands of products, and inventory updates arrive from multiple warehouses and stores. If this data is wrong, customers see “in stock” on a product page and later receive an “out of stock” email.

Ryan designs a new inventory pipeline.

| Component | Source or Tool | Target | Purpose |
|---|---|---|---|
| Extraction | Warehouse API | Raw data table | Capture every stock change |
| Transformation | Python and SQL | Clean inventory table | Remove duplicates |
| Load | Data warehouse to website | Real-time stock view | Accurate product pages |

Key decisions:

- Run every 10 minutes so stock stays fresh.

- Prevent stock from going below zero.

- Log every adjustment for audits.
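The three key decisions can be illustrated together in a small in-memory sketch. It assumes, hypothetically, that each warehouse update carries a unique `event_id` and a signed `delta`; the `StockEvent` class and `apply_events` function are illustrative names, not part of PeakBloom's system. The 10-minute schedule itself would live in the orchestrator, not in this code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StockEvent:
    event_id: str      # hypothetical unique ID attached to each warehouse update
    product_id: int
    warehouse_id: str
    delta: int         # +N for a restock, -N for a sale or correction


def apply_events(
    stock: dict[tuple[int, str], int],
    events: list[StockEvent],
    audit_log: list[tuple],
) -> dict[tuple[int, str], int]:
    """Apply a batch of stock changes in order."""
    seen: set[str] = set()
    for ev in events:
        # Remove duplicates: the same event delivered twice is applied once.
        if ev.event_id in seen:
            continue
        seen.add(ev.event_id)

        key = (ev.product_id, ev.warehouse_id)
        before = stock.get(key, 0)
        # Prevent stock from going below zero.
        after = max(before + ev.delta, 0)
        stock[key] = after

        # Log every adjustment, including the requested delta, for audits.
        audit_log.append((ev.event_id, key, before, ev.delta, after))
    return stock


stock = {(101, "W1"): 30}
events = [
    StockEvent("e1", 101, "W1", 10),
    StockEvent("e1", 101, "W1", 10),   # duplicate delivery, skipped
    StockEvent("e2", 101, "W1", -50),  # would go negative, clamped to 0
]
audit: list[tuple] = []
apply_events(stock, events, audit)
print(stock[(101, "W1")])  # 0
print(len(audit))          # 2
```

Because the audit entry records both the requested delta and the clamped result, an oversell is still visible to anyone reviewing the log. In a real pipeline the set of seen event IDs would need to persist across runs rather than live inside one batch.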

1:00 PM – Lunch and Quick Learning Break

During lunch, Ryan watches a video about data warehouse query performance. Data engineers regularly set aside time to learn new techniques for SQL, cloud cost control, and streaming tools.

1:30 PM – Implementing the Inventory Pipeline

High-level tasks:

- Create a raw_inventory_events table for warehouse updates.

- Write a Python job to call the warehouse API.

- Use SQL to aggregate the events into a current_inventory table.

Example rows from current_inventory:

| product_id | warehouse_id | current_stock | last_updated |
|---|---|---|---|
| 101 | W1 | 34 | 2025-12-29 12:05 |
| 101 | W2 | 12 | 2025-12-29 12:09 |

This feeds website stock badges and supply chain dashboards.
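The raw-to-current step can be sketched with Python's built-in `sqlite3` standing in for the warehouse. The table names come from the task list, but the column layout is an assumption: here each raw event is a signed stock change (`delta`) with an `event_id`, and the made-up events are chosen so the result matches the example rows. Duplicates are dropped with `SELECT DISTINCT` before summing.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE raw_inventory_events (
    event_id     TEXT,
    product_id   INTEGER,
    warehouse_id TEXT,
    delta        INTEGER,  -- stock change reported by the warehouse (assumed)
    event_time   TEXT      -- ISO-8601 text, so MAX() picks the latest time
);
CREATE TABLE current_inventory (
    product_id    INTEGER,
    warehouse_id  TEXT,
    current_stock INTEGER,
    last_updated  TEXT,
    PRIMARY KEY (product_id, warehouse_id)
);
""")

conn.executemany(
    "INSERT INTO raw_inventory_events VALUES (?, ?, ?, ?, ?)",
    [
        ("e1", 101, "W1", 40, "2025-12-29 09:00"),
        ("e2", 101, "W1", -6, "2025-12-29 12:05"),
        ("e2", 101, "W1", -6, "2025-12-29 12:05"),  # duplicate delivery
        ("e3", 101, "W2", 12, "2025-12-29 12:09"),
    ],
)

# Rebuild the snapshot: drop duplicate events, then sum changes per product and warehouse.
with conn:
    conn.execute("DELETE FROM current_inventory")
    conn.execute("""
        INSERT INTO current_inventory
        SELECT product_id, warehouse_id, SUM(delta), MAX(event_time)
        FROM (SELECT DISTINCT event_id, product_id, warehouse_id, delta, event_time
              FROM raw_inventory_events)
        GROUP BY product_id, warehouse_id
    """)

for row in conn.execute("SELECT * FROM current_inventory ORDER BY warehouse_id"):
    print(row)
# (101, 'W1', 34, '2025-12-29 12:05')
# (101, 'W2', 12, '2025-12-29 12:09')
```

A full rebuild keeps the sketch short; at PeakBloom's volume, an incremental update that only processes events newer than the last run would likely be cheaper every 10 minutes.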

3:30 PM – Helping Analysts with Slow Reports

The analytics team pings: “The cart abandonment report takes 40 seconds.”

Ryan finds the issue: the report scans a full year of data, even though most users only need the last 30 days.

Fix:

- Partition the underlying table by date.

- Default the query to the last 30 days.
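The "default to 30 days" fix amounts to always sending a date filter on the partition column, so the warehouse can skip partitions outside the window (how much it skips depends on the warehouse engine). The sketch below is a hedged illustration: the `cart_events` table, its `event_date` and `status` columns, and the `cart_abandonment_query` helper are hypothetical, and the `?` placeholders follow the DB-API qmark style, which varies by driver.

```python
from datetime import date, timedelta


def cart_abandonment_query(end: date, days: int = 30) -> tuple[str, tuple[str, str]]:
    """Build the report query with a date filter that defaults to the last 30 days.

    Filtering on the partition column (event_date) lets the warehouse prune
    partitions outside the window instead of scanning a full year.
    """
    start = end - timedelta(days=days - 1)  # inclusive window of `days` days
    sql = (
        "SELECT event_date, COUNT(*) AS abandoned_carts "
        "FROM cart_events "
        "WHERE event_date BETWEEN ? AND ? AND status = 'abandoned' "
        "GROUP BY event_date"
    )
    return sql, (start.isoformat(), end.isoformat())


sql, params = cart_abandonment_query(date(2025, 12, 29))
print(params)  # ('2025-11-30', '2025-12-29')
```

Analysts who genuinely need a full year can still pass `days=365`; the point is that the cheap query becomes the default.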

Result: the report's run time drops from 40 seconds to 3 seconds, so marketing can test checkout flows faster.

4:30 PM – Documentation and Knowledge Sharing

Ryan documents:

- How the inventory pipeline works

- Assumptions made

- Table locations and refresh rates

This helps new engineers, analysts, and product managers.

5:15 PM – End of Day Checks

Ryan confirms that:

- The new jobs are running.

- The website shows correct stock.

He also creates a task to start payment drop-off tracking tomorrow.

Impact: accurate revenue, reliable inventory, faster reports, and smoother shopping.

What Skills Does an E Commerce Data Engineer Use?

| Skill | E-Commerce Example |
|---|---|
| SQL | Aggregating orders by discount |
| Python | Calling order and inventory APIs |
| Data Modeling | Orders, customers, and inventory tables |
| Orchestration | Nightly revenue loads, 10-minute inventory refreshes |
| Monitoring | Pipeline failure alerts |
| Communication | Explaining data to finance and marketing |
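To make the SQL row concrete, here is a minimal sketch of aggregating orders by discount, run against an in-memory `sqlite3` database. The `orders` table, its columns, and the sample rows are made up for illustration. Amounts are stored in integer cents to avoid floating-point rounding, and `COALESCE` groups orders without a coupon under one label.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount_cents INTEGER, discount_code TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 5990, "WINTER10"),
        (2, 2450, None),        # no coupon applied
        (3, 4010, "WINTER10"),
    ],
)

query = """
SELECT COALESCE(discount_code, 'no discount') AS discount,
       COUNT(*)                               AS order_count,
       SUM(amount_cents)                      AS revenue_cents
FROM orders
GROUP BY COALESCE(discount_code, 'no discount')
ORDER BY revenue_cents DESC
"""
for row in conn.execute(query):
    print(row)
# ('WINTER10', 2, 10000)
# ('no discount', 1, 2450)
```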

Why Data Engineering Matters for E-Commerce

Good data engineering means:

- Accurate stock levels

- Reliable revenue numbers

- Clean data for recommendations

- Fast dashboards for quick decisions

Is Data Engineering Right for You?

If you enjoy building systems that power online stores, solving pipeline puzzles, and working with APIs and databases, this path may be a good fit.

No CS degree needed. Start with SQL, basic Python, and curiosity.

At Encanto Data Career Counseling, you can build portfolio projects such as e-commerce order pipelines and inventory dashboards.

FAQs

1. Is data engineering mostly coding all day?

No. It is roughly 40% coding, 30% debugging pipelines, 20% designing systems, and 10% meetings and documentation. A large share of the time goes to fixing real issues like failed order loads or slow inventory queries.

2. Do I need a computer science degree for data engineering?

No. Many e-commerce data engineers learn on the job with SQL, Python basics, and tools like Airflow. Build a portfolio project such as an orders pipeline; many hiring managers care more about that than about a degree.

3. What tools did Ryan use at PeakBloom?

Airflow for scheduling jobs, Python for API calls, SQL for cleaning data, and Snowflake for storage. Beginners can replicate e-commerce pipelines at home with open-source tools like Airflow and Python, plus a free trial of a cloud warehouse such as Snowflake.

4. Why did the orders pipeline break in the example?

The product team added a new discount_code field to orders. The old code expected the previous format, so it failed. This shows why data engineers add schema checks that detect new or missing fields before they break a load.
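A schema check of this kind can be as simple as comparing the fields in an incoming batch with the fields the pipeline expects. The sketch below is an illustrative assumption, not the tool PeakBloom uses: `schema_drift` and `EXPECTED_ORDER_FIELDS` are hypothetical names. It reports fields that appear in the batch but are not expected, and expected fields that no record contains.

```python
def schema_drift(records: list[dict], expected: set[str]) -> dict[str, list[str]]:
    """Report new fields (seen but not expected) and missing fields (expected but never seen)."""
    seen: set[str] = set()
    for record in records:
        seen.update(record.keys())
    return {
        "new": sorted(seen - expected),
        "missing": sorted(expected - seen),
    }


EXPECTED_ORDER_FIELDS = {"order_id", "customer_id", "amount_cents"}
batch = [
    {"order_id": 1, "customer_id": 7, "amount_cents": 5990, "discount_code": "WINTER10"},
    {"order_id": 2, "customer_id": 9, "amount_cents": 2450},
]
drift = schema_drift(batch, EXPECTED_ORDER_FIELDS)
print(drift)  # {'new': ['discount_code'], 'missing': []}
```

Running a check like this before the load step would let a pipeline raise an alert about discount_code in the morning report rather than failing partway through the overnight run.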

5. What is the difference between a data engineer and a data analyst?

Analysts create dashboards and answer questions like “Why did sales drop?” Engineers build the pipelines so analysts have clean data to work with. Engineers code more; analysts visualize more.

6. How much SQL and Python do data engineers really use?

Both, every day. SQL accounts for roughly 70% of the work (queries, tables) and Python for about 30% (APIs, automation). In e-commerce, SQL handles orders and inventory, while Python pulls live data from Shopify or ad platform APIs.

Take Control of Your Data Career Today

No fluff, no distractions, just human-to-human mentoring to help you navigate your data career path with clarity and confidence.